Traditional model-based control methods, built on equations of motion, manage many robot operations effectively but struggle with contact and friction, which leads to unstable, imprecise controllers that often need manual tuning. Reinforcement learning has emerged as a capable alternative for building robust robot controllers that handle contact-related challenges well.

In this work, we introduce a deep reinforcement learning approach to the soft-capture phase for free-floating moving targets, mainly space debris, in the presence of noisy data. Our findings underscore the crucial role of tactile sensors, even during the soft-capture phase. By employing deep reinforcement learning, we eliminate the need for manual feature design, which simplifies the problem and lets the robot learn soft-capture strategies through trial and error. To facilitate effective learning of the approach phase, we designed a specialized reward function that gives the agent clear and informative feedback.

Our method is trained entirely in simulation, with no need for direct demonstrations or prior knowledge of the task. The resulting control policy shows promising results and highlights the necessity of tactile sensor information.

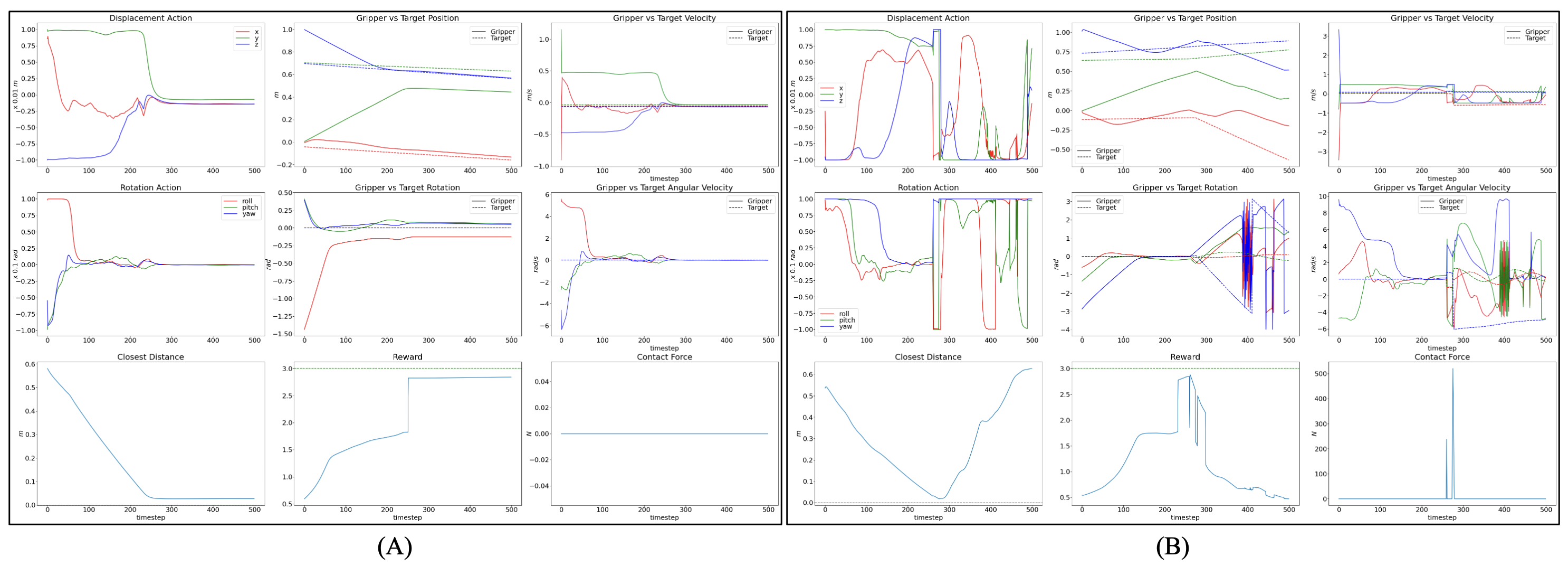

To evaluate the effectiveness of tactile feedback in the soft-capture phase, we conducted a series of experiments in a simulated environment. The robot, trained with the Soft Actor-Critic algorithm, was tasked with pre-capturing a moving free-floating target using a Robotiq 3F gripper. Its performance improved significantly when tactile feedback was enabled: it was able to avoid collisions and position itself correctly for the soft-capture phase. These results demonstrate the importance of tactile feedback in improving the success rate of the soft-capture phase in space debris removal missions. Episode (A) shows the robot's behavior with tactile feedback enabled, while episode (B) shows its behavior without tactile feedback.

Six videos show the robot's behavior after training. Some policies use tactile feedback, while others do not. The importance of tactile feedback is evident in the robot's ability to avoid collisions and position itself correctly for the soft-capture phase.

Tactile feedback enabled - Example 1

No tactile feedback - Example 2

Tactile feedback enabled - Example 3

No tactile feedback - Example 4

Tactile feedback enabled - Example 5

No tactile feedback - Example 6

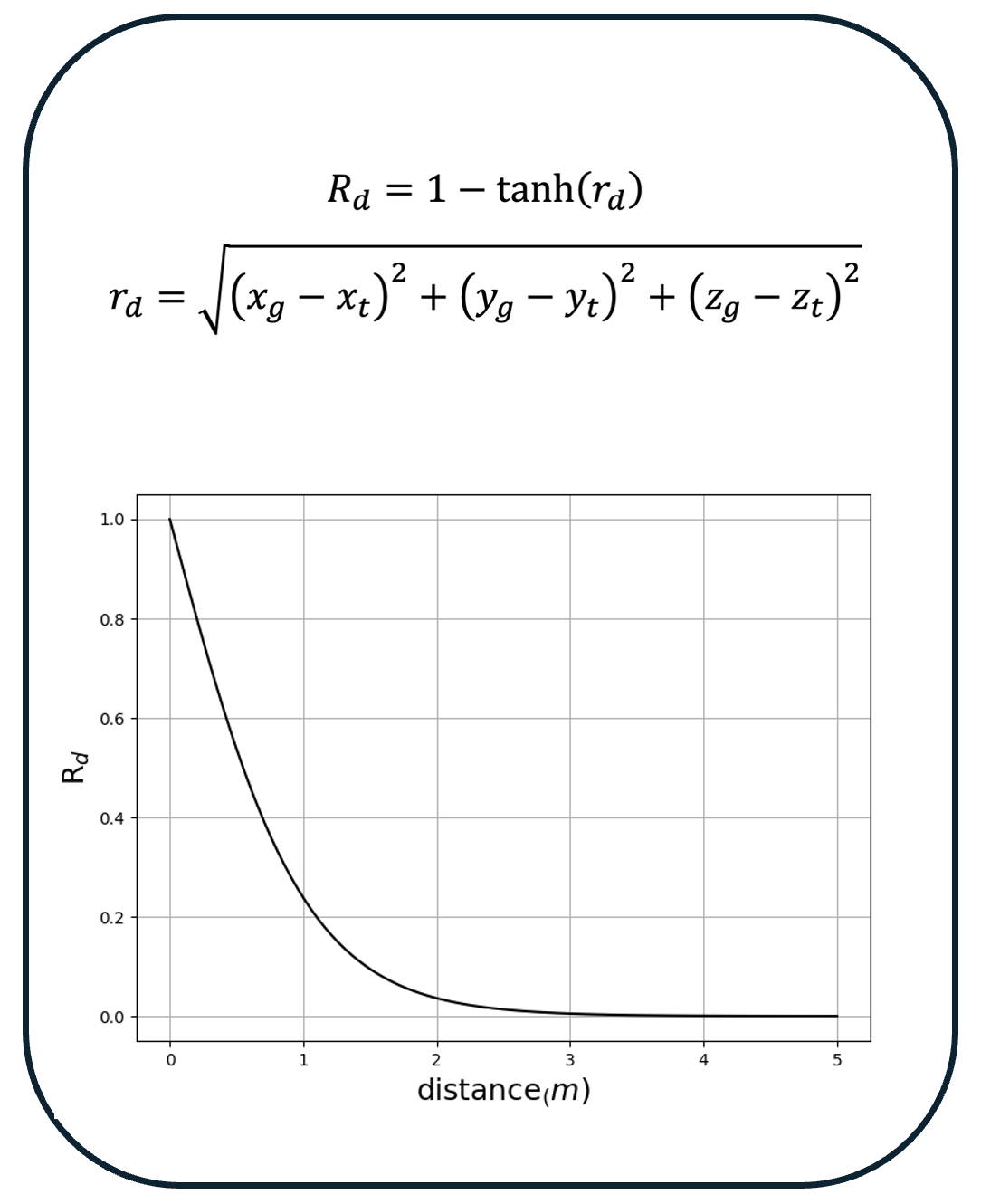

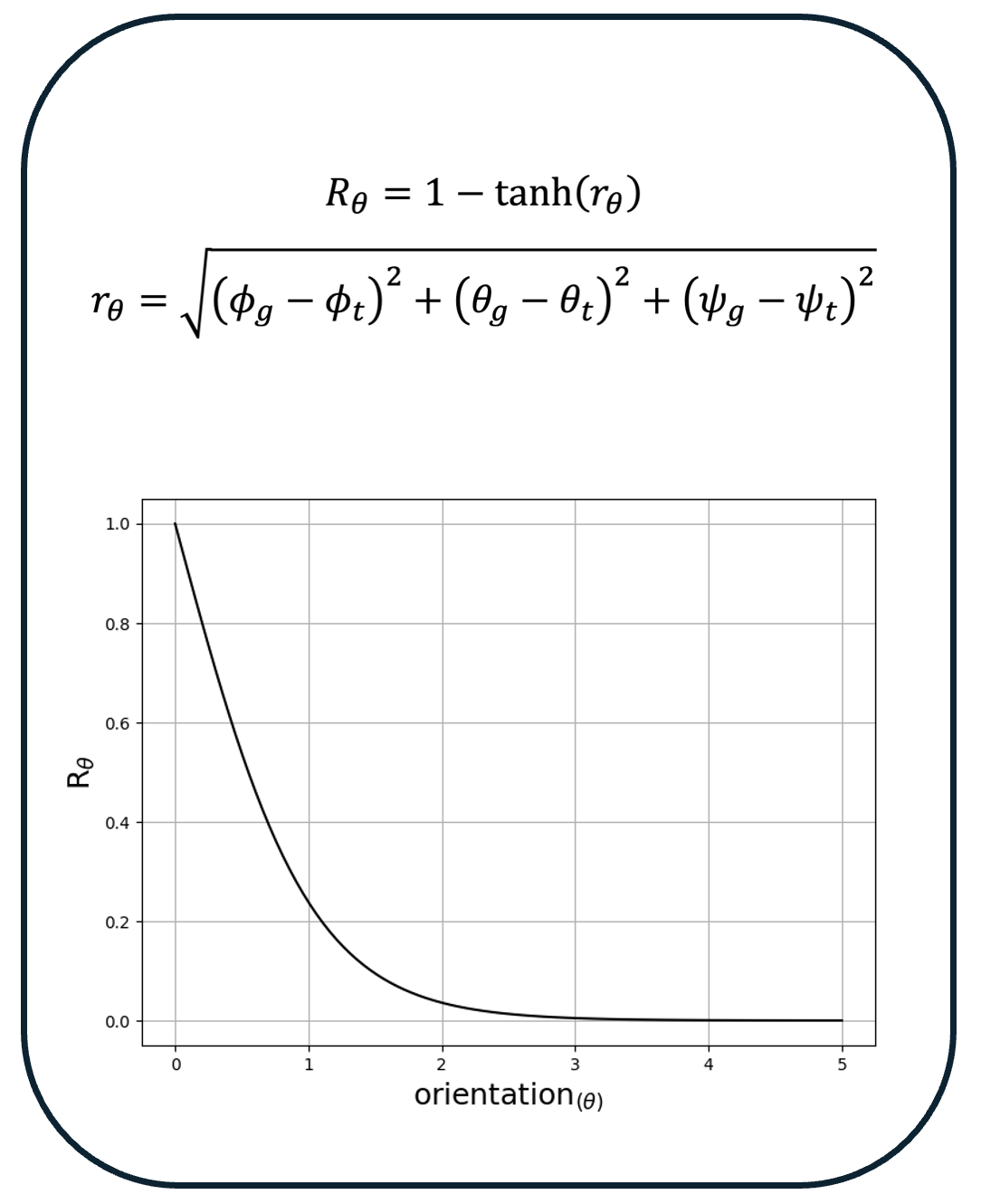

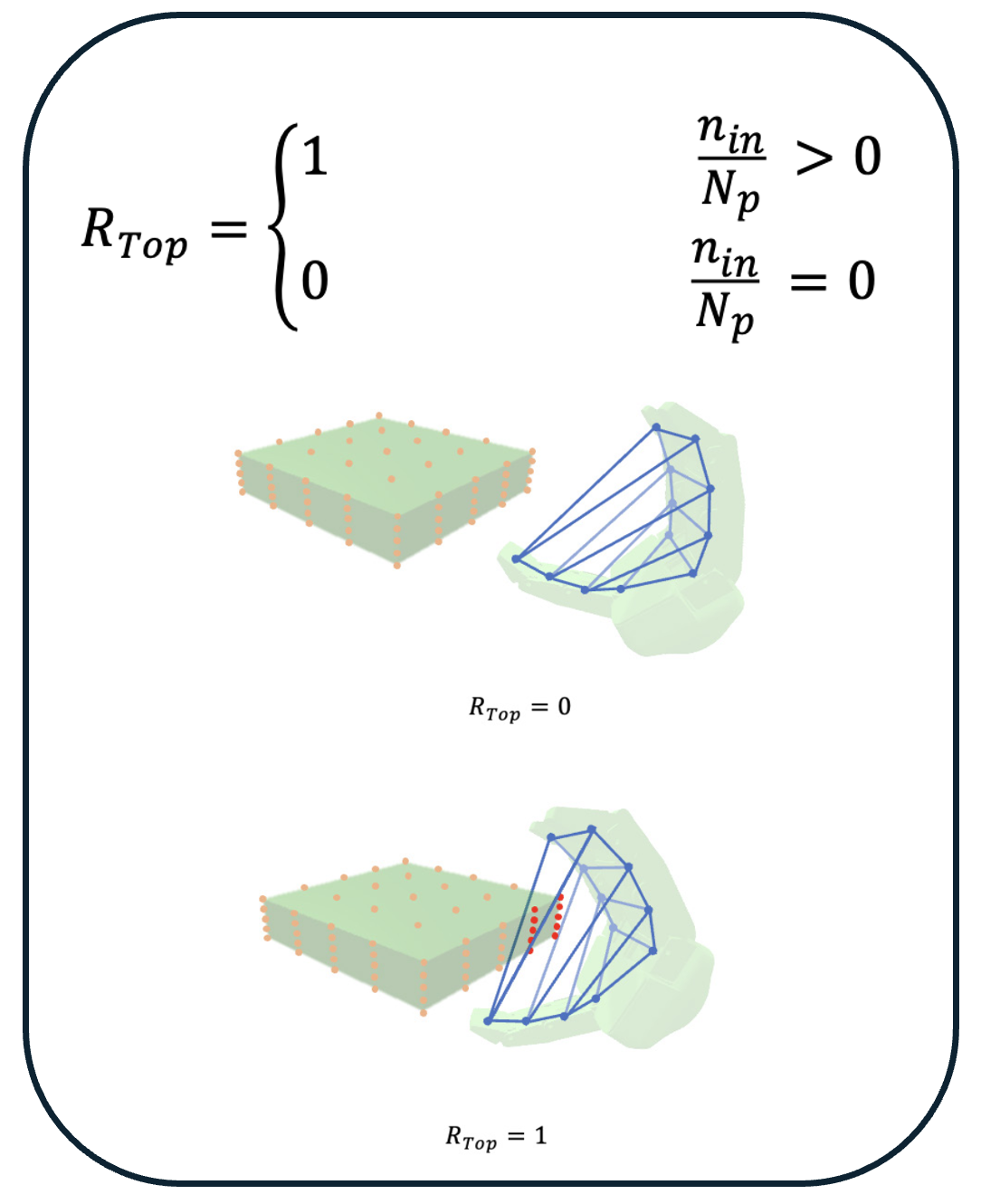

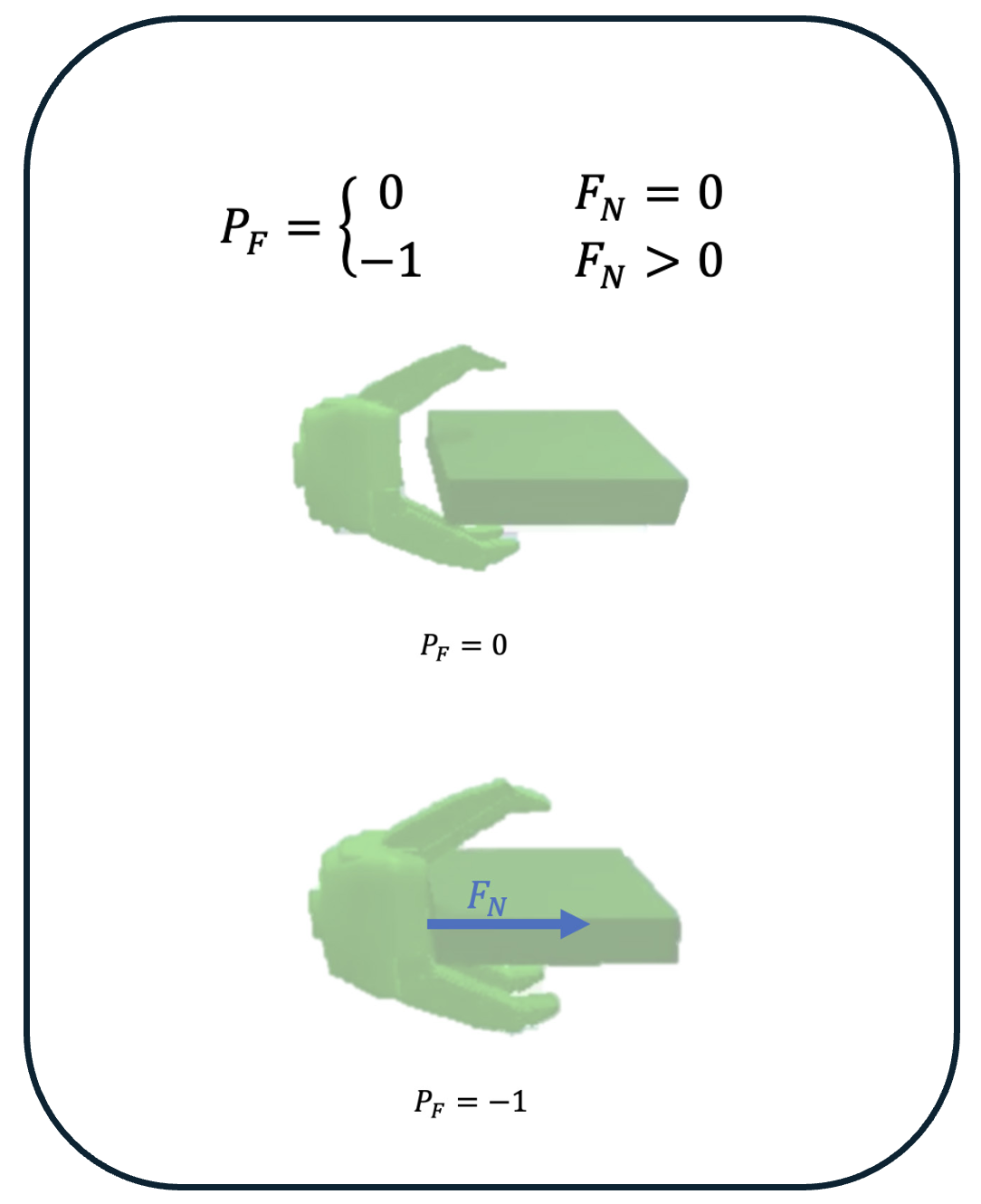

The reward function has four novel components, designed to drive the robot toward the target, follow it, and reach the pre-grasp position. Each component is normalized between 0 and 1, which helps isolate its impact on the robot's behavior during training.

position correction

orientation correction

topology encouragement

contact penalization
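As a rough illustration of how four components normalized to [0, 1] might be combined, here is a minimal Python sketch. The page names the components but does not give their formulas, so everything below is an assumption: the exponential distance shaping, the linear angle and force terms, the finger-count proxy for topology, the unit weights, and all function and parameter names are hypothetical and should not be read as the authors' reward function.

```python
import math


def clamp01(x: float) -> float:
    """Clip a value to the closed interval [0, 1]."""
    return max(0.0, min(1.0, x))


def position_term(distance_m: float, scale_m: float = 1.0) -> float:
    """Assumed shaping: 1 at the pre-grasp point, decaying toward 0 with distance."""
    return math.exp(-abs(distance_m) / scale_m)


def orientation_term(angle_error_rad: float) -> float:
    """Assumed shaping: 1 when aligned, 0 when the error reaches pi radians."""
    return clamp01(1.0 - abs(angle_error_rad) / math.pi)


def topology_term(fingers_around_target: int, num_fingers: int = 3) -> float:
    """Assumed proxy: fraction of gripper fingers placed around the target."""
    return clamp01(fingers_around_target / num_fingers)


def contact_term(contact_force_n: float, max_force_n: float = 10.0) -> float:
    """Assumed penalty size: 0 with no contact, 1 at or above max_force_n."""
    return clamp01(abs(contact_force_n) / max_force_n)


def total_reward(distance_m, angle_error_rad, fingers_around_target,
                 contact_force_n, weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted sum of the three encouraging terms minus the contact penalty.

    Returns the scalar reward and the individual components, so each
    component can be logged and inspected separately during training.
    """
    components = {
        "position": position_term(distance_m),
        "orientation": orientation_term(angle_error_rad),
        "topology": topology_term(fingers_around_target),
        "contact": contact_term(contact_force_n),
    }
    w_pos, w_ori, w_top, w_con = weights  # hypothetical weights
    reward = (w_pos * components["position"]
              + w_ori * components["orientation"]
              + w_top * components["topology"]
              - w_con * components["contact"])
    return reward, components
```

Because every term lies in [0, 1], the weights directly express relative importance, and logging the returned components per step makes it easier to see which term drives a change in behavior.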

@article{beigomi2024improving,
  title={Improving Soft-Capture Phase Success in Space Debris Removal Missions: Leveraging Deep Reinforcement Learning and Tactile Feedback},
  author={Beigomi, Bahador and Zhu, Zheng H},
  journal={arXiv preprint arXiv:2409.12273},
  year={2024}
}